Objective

To validate a classification sheet for medication errors associated with antineoplastic medication.

Method

Prospective study. A data sheet was designed based on the classification of the American Society of Health-System Pharmacists (ASHP). Two observers reviewed the lines of treatment of the chemotherapy prescriptions issued by the Haematology Department over one month and classified the errors detected. Interobserver concordance was analysed using the kappa index test. The error categories with a moderate or lower concordance were reviewed, and the need to modify them was evaluated.

Results

A total of 23 error categories were analysed and 162 lines of treatment were reviewed. Only one category had an error prevalence that allowed it to be assessed: incomplete or ambiguous prescription (kappa index=0.458, moderate concordance). The causes were analysed and this category was broken down into subsections.

Conclusion

Our results showed the need to review the error classification. Validated tools need to be made available in order to make progress in characterising this type of medication error.


The prevalence of medication errors (ME) associated with antineoplastic agents is not precisely known and its incidence is difficult to determine.1 Establishing valid comparisons between distinct studies is complicated due to the differences in variables studied, measurements, populations and methodology used.2

Although several ME classifications have been published, such as those of the American Society of Health-System Pharmacists2 and the Ruíz-Jarabo group,3 none of these are specific to antineoplastic treatments. There may be error categories in each classification that are not applicable to this type of medication. Therefore, selecting one classification depends on the scope and purpose for which it is intended.

Regardless of the research study design, validity may be seriously affected if unreliable measurements are used. A significant source of measurement error is its variability when more than one observer is involved (interobserver variability), since the quality of the measurement may be significantly compromised by observer (OBS) subjectivity, which affects the reproducibility of the study.4

Interobserver variability may be assessed through correlation studies, which aim to determine to what extent two OBS agree in their measurements, and thereby identify the causes of discrepancies and attempt to correct them. The statistical way of addressing this problem depends on the nature of the data. When these data are categorical, the most frequently used test is the kappa index.4

The aim of this study is to validate an ME classification sheet associated with antineoplastic medication and to make changes to it, if necessary, based on results obtained from its implementation in the Antineoplastic Medication Unit.

Methods

This was a prospective study performed in the Pharmacy Department of a tertiary hospital.

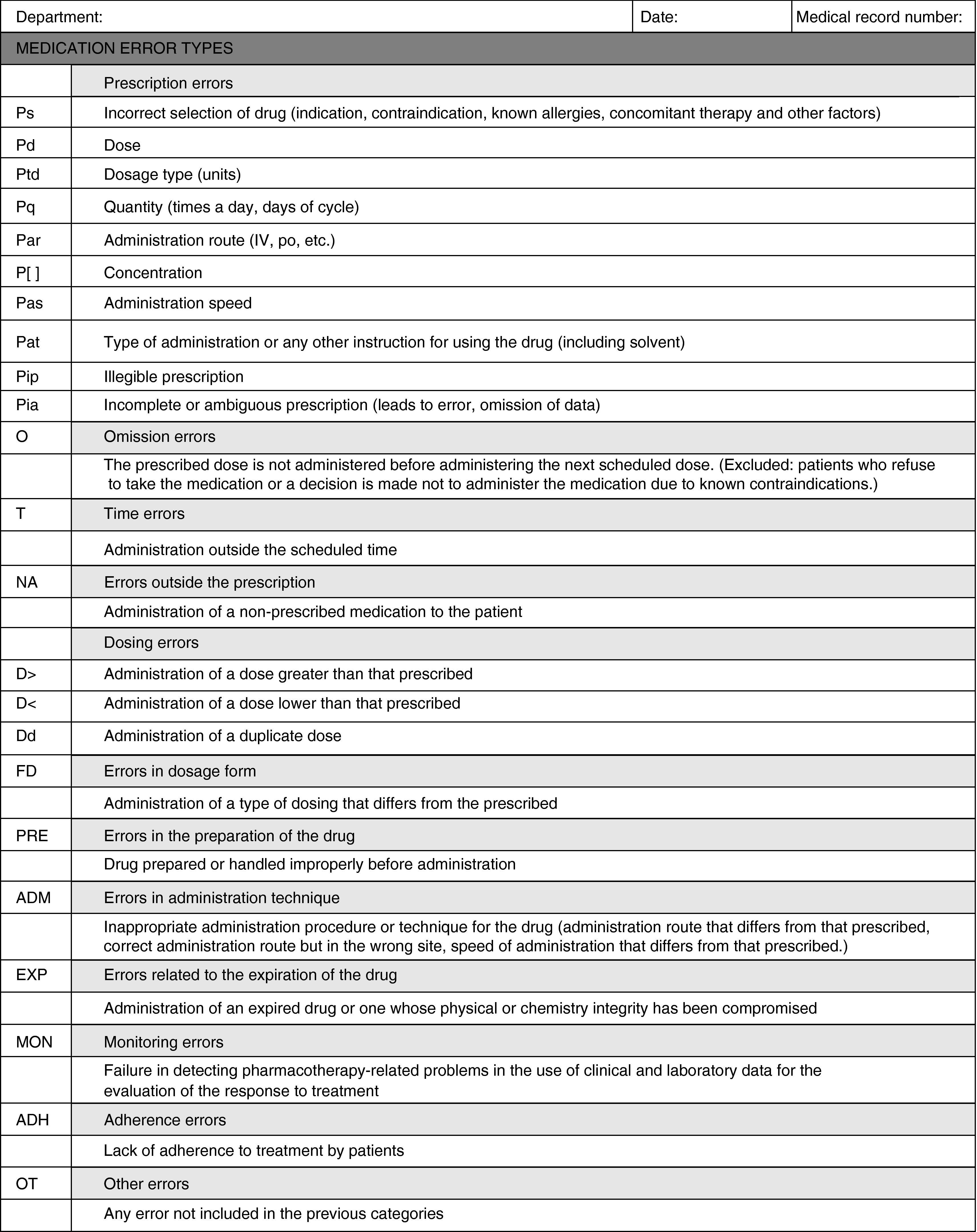

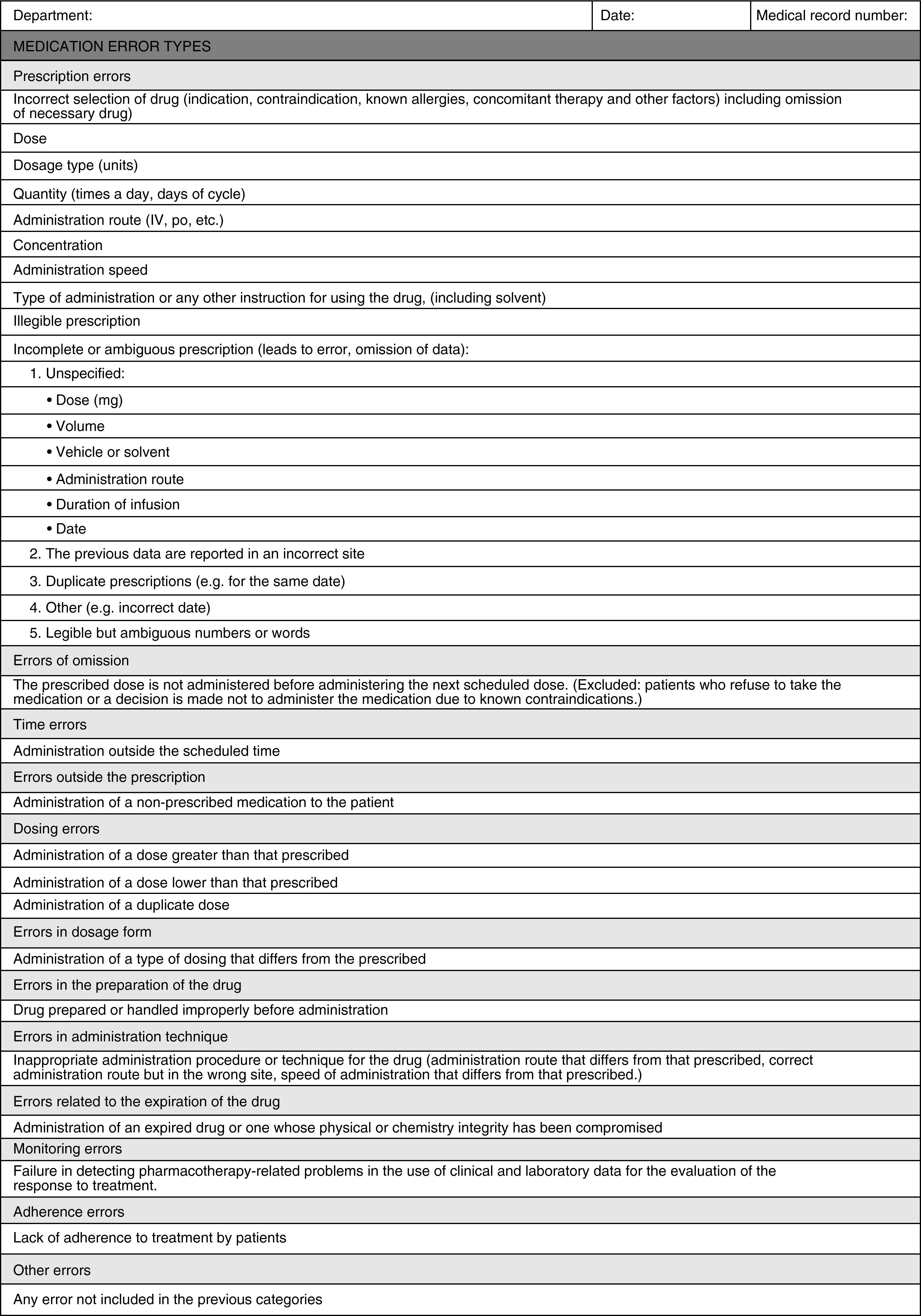

To classify detected MEs, a data collection sheet was designed (Appendix A) based on the ME classification of the American Society of Health-System Pharmacists.

This classification was selected because it was considered the most applicable for categorising errors in routine healthcare practice in this field.

Two properly trained OBS independently reviewed all lines of treatment of chemotherapy prescriptions issued by the Haematology Department for one month. They recorded detected errors in the data collection sheet. A line of treatment was defined as each oral or parenteral antineoplastic dose that had been prescribed and validated, or whose prescription had been modified, provided that the date of administration fell within the study month. Each line of treatment was considered an observation for the statistical analysis.

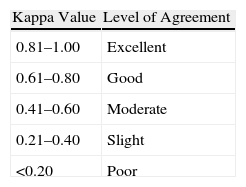

Interobserver agreement in the classification of detected MEs was analysed using the kappa index test. The variables collected in the agreement study were the possible types of ME. The percentage of concordant observations was calculated for each variable (index of observed agreement or simple agreement). The kappa value equals 1 if there is complete agreement, 0 if the observed agreement equals that expected by chance, and is less than 0 if the observed agreement is less than that expected by chance.5 Table 1 lists the interpretation of kappa values according to Landis and Koch.6
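For reference, the behaviour described above (1 for complete agreement, 0 for chance-level agreement, negative below chance) follows from the conventional definition of Cohen's kappa for two observers. The text does not spell out the formula, so the notation below ($P_o$, $P_e$, $p_1$, $p_2$) is an assumption of this sketch, written for a single error category scored as present or absent:

```latex
\kappa = \frac{P_o - P_e}{1 - P_e},
\qquad
P_e = p_1 p_2 + (1 - p_1)(1 - p_2)
```

Here $P_o$ is the observed proportion of lines on which the two OBS agree, $P_e$ is the agreement expected by chance, and $p_1$ and $p_2$ are the proportions of lines in which OBS 1 and OBS 2, respectively, recorded the error.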

Table 1. Interpretation of Kappa Values (According to Landis and Koch6).

| Kappa Value | Level of Agreement |
| --- | --- |
| 0.81–1.00 | Excellent |
| 0.61–0.80 | Good |
| 0.41–0.60 | Moderate |
| 0.21–0.40 | Slight |
| <0.20 | Poor |
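The bands in the table above can be applied mechanically. The following is a minimal sketch, not part of the study: the function name is hypothetical, and because the printed bands leave small gaps (for example between 0.80 and 0.81), the cut-offs are assumed to sit at 0.80, 0.60, 0.40 and 0.20.

```python
def landis_koch_level(kappa: float) -> str:
    """Map a kappa value to the agreement level used in the table above.

    Assumption: band edges are placed at 0.80, 0.60, 0.40 and 0.20,
    since the printed ranges do not cover values such as 0.805.
    """
    if kappa > 0.80:
        return "Excellent"
    if kappa > 0.60:
        return "Good"
    if kappa > 0.40:
        return "Moderate"
    if kappa > 0.20:
        return "Slight"
    return "Poor"


# The three kappa values reported in the Results section.
for value in (0.458, 0.382, 0.530):
    print(value, landis_koch_level(value))
```

With these cut-offs the three reported values fall in the same bands as stated in the text (moderate, slight and moderate).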

The kappa index depends on the prevalence of the assessed categories: when a category has a very high or very low prevalence, the kappa index decreases even if the quality of measurement remains constant.5 For this reason, the kappa index was considered applicable only to categories whose prevalence of error was between 10% and 90%. Nevertheless, the kappa index was also calculated for the categories with the next highest prevalence of error, even though their prevalence was below 10%.
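The dependence on prevalence can be illustrated with a simple model. This is a sketch under stated assumptions, not the authors' analysis: each observer is assumed to detect a true error with probability 0.9 and to correctly pass an error-free line with probability 0.9, independently of the other observer, and the kappa index is taken to be Cohen's kappa. The function name and parameters are hypothetical.

```python
def expected_kappa(prevalence: float,
                   sensitivity: float = 0.9,
                   specificity: float = 0.9) -> float:
    """Expected Cohen's kappa for two observers of identical, fixed quality.

    Assumption: given the true status of a line, the two observers
    classify it independently of each other.
    """
    p, se, sp = prevalence, sensitivity, specificity
    both_yes = p * se ** 2 + (1 - p) * (1 - sp) ** 2
    both_no = p * (1 - se) ** 2 + (1 - p) * sp ** 2
    yes_rate = p * se + (1 - p) * (1 - sp)  # same for both observers
    observed = both_yes + both_no
    chance = yes_rate ** 2 + (1 - yes_rate) ** 2
    return (observed - chance) / (1 - chance)


for prevalence in (0.05, 0.10, 0.50, 0.90, 0.95):
    print(f"prevalence {prevalence:.2f}: kappa {expected_kappa(prevalence):.2f}")
```

In this model the observed agreement is 82% at every prevalence, yet the expected kappa falls from 0.64 at 50% prevalence to about 0.25 at 5% or 95%, because agreement expected by chance rises as the category becomes rare or near-universal.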

The error categories that obtained moderate or lower agreement were reviewed and an assessment was made as to whether they needed changing.

Statistical analysis of the results was performed using SPSS version 13.0.

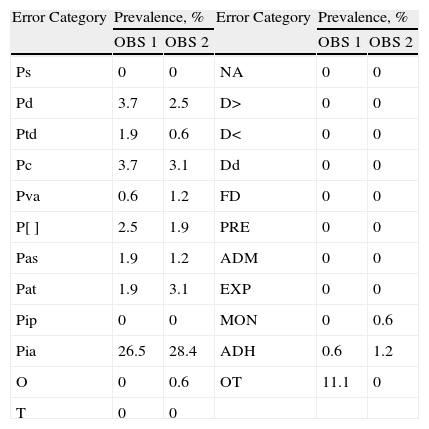

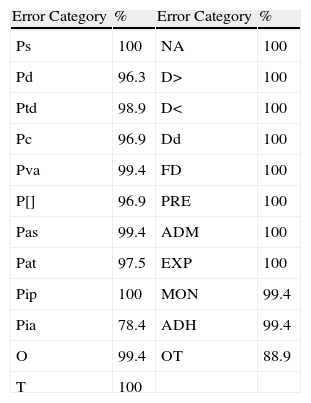

Results

A total of 23 error categories were analysed. Each OBS reviewed 162 lines of treatment and recorded, for each line, whether an error was present in each category. The prevalence of error for each category according to each OBS is listed in Table 2, and the percentages of agreement between the two OBS, which ranged between 78.4% and 100%, are shown in Table 3.

Table 2. Prevalence of Error by Category.

| Error Category | Prevalence OBS 1, % | Prevalence OBS 2, % | Error Category | Prevalence OBS 1, % | Prevalence OBS 2, % |
| --- | --- | --- | --- | --- | --- |
| Ps | 0 | 0 | NA | 0 | 0 |
| Pd | 3.7 | 2.5 | D> | 0 | 0 |
| Ptd | 1.9 | 0.6 | D< | 0 | 0 |
| Pc | 3.7 | 3.1 | Dd | 0 | 0 |
| Pva | 0.6 | 1.2 | FD | 0 | 0 |
| P[] | 2.5 | 1.9 | PRE | 0 | 0 |
| Pas | 1.9 | 1.2 | ADM | 0 | 0 |
| Pat | 1.9 | 3.1 | EXP | 0 | 0 |
| Pip | 0 | 0 | MON | 0 | 0.6 |
| Pia | 26.5 | 28.4 | ADH | 0.6 | 1.2 |
| O | 0 | 0.6 | OT | 11.1 | 0 |
| T | 0 | 0 | | | |

ADH: adherence errors; ADM: errors in administration technique; EXP: errors related to the expiration of drugs; D>: administration of dose greater than that prescribed; D<: administration of dose less than that prescribed; Dd: administration of duplicate dose; FD: errors in dosage form; MON: monitoring errors; NA: errors outside the prescription; O: omission errors; OBS: observer; OT: other errors; P[]: prescription of concentration; Pc: prescription of quantity; Pd: prescription of doses; Pat: prescription of administration type or any other instruction for using the drug; Ptd: prescription of the type of dosage; Pia: incomplete or ambiguous prescription; Pip: illegible prescription; PRE: errors in the preparation of the drug; Ps: prescription of incorrectly selected drug; Pva: prescription of administration route; Pas: prescription of administration speed; T: time errors.

Table 3. Percentages of Agreement.

| Error Category | % | Error Category | % |
| --- | --- | --- | --- |
| Ps | 100 | NA | 100 |
| Pd | 96.3 | D> | 100 |
| Ptd | 98.9 | D< | 100 |
| Pc | 96.9 | Dd | 100 |
| Pva | 99.4 | FD | 100 |
| P[] | 96.9 | PRE | 100 |
| Pas | 99.4 | ADM | 100 |
| Pat | 97.5 | EXP | 100 |
| Pip | 100 | MON | 99.4 |
| Pia | 78.4 | ADH | 99.4 |
| O | 99.4 | OT | 88.9 |
| T | 100 | | |

ADH: adherence errors; ADM: errors in administration technique; EXP: errors related to the expiration of drugs; D>: administration of dose greater than that prescribed; D<: administration of dose less than that prescribed; Dd: administration of duplicate dose; FD: errors in dosage form; MON: monitoring errors; NA: errors outside the prescription; O: omission errors; OT: other errors; P[]: prescription of concentration; Pc: prescription of quantity; Pd: prescription of doses; Pat: prescription of administration type or any other instruction for using the drug; Ptd: prescription of the type of dosage; Pia: incomplete or ambiguous prescription; Pip: illegible prescription; PRE: errors in the preparation of the drug; Ps: prescription of incorrectly selected drug; Pva: prescription of administration route; Pas: prescription of administration speed; T: time errors.

Both OBS detected a prevalence of error between 10% and 90% in only one of the analysed categories, incomplete or ambiguous prescription (Pia). For this error category, a kappa value of 0.458 (moderate agreement) was obtained. The categories with the next highest prevalence of error were prescription of doses and prescription of quantity. For prescription of doses, a kappa index of 0.382 (slight agreement) was obtained; for prescription of quantity, the kappa index was 0.530 (moderate agreement). Analysis of the causes of the moderate agreement in the Pia category showed that it was a very broad and unspecific category. Therefore, a new sheet was proposed that breaks this category down into subcategories (Appendix B). For the other categories, the low prevalence of error precluded the analysis of agreement.
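As a consistency check on these figures, the 2×2 agreement table for a category can be rebuilt from the percentages in Tables 2 and 3 and the kappa index recomputed. The sketch below rests on two assumptions: that the rounded percentages correspond to whole counts out of 162 lines (for example, 26.5% taken as 43 lines), and that the kappa index is Cohen's kappa for two observers. The function names are illustrative only; the study itself used SPSS.

```python
def cells_from_report(n, yes_obs1, yes_obs2, n_agree):
    """Rebuild (both_yes, only_obs1, only_obs2, both_no) from reported totals."""
    discordant = n - n_agree
    both_yes, remainder = divmod(yes_obs1 + yes_obs2 - discordant, 2)
    only_obs1 = yes_obs1 - both_yes
    only_obs2 = yes_obs2 - both_yes
    both_no = n - both_yes - only_obs1 - only_obs2
    cells = (both_yes, only_obs1, only_obs2, both_no)
    if remainder or min(cells) < 0:
        raise ValueError("reported figures are not mutually consistent")
    return cells


def cohen_kappa(both_yes, only_obs1, only_obs2, both_no):
    """Cohen's kappa for one yes/no category scored by two observers."""
    n = both_yes + only_obs1 + only_obs2 + both_no
    observed = (both_yes + both_no) / n
    yes1 = (both_yes + only_obs1) / n
    yes2 = (both_yes + only_obs2) / n
    chance = yes1 * yes2 + (1 - yes1) * (1 - yes2)
    return (observed - chance) / (1 - chance)


N_LINES = 162
# Assumed counts: (errors by OBS 1, errors by OBS 2, concordant lines),
# back-calculated from the rounded percentages in Tables 2 and 3.
reported = {
    "Pia": (43, 46, 127),  # 26.5%, 28.4%, 78.4% agreement
    "Pd": (6, 4, 156),     # 3.7%, 2.5%, 96.3% agreement
    "Pc": (6, 5, 157),     # row Pc, assumed to be prescription of quantity
}
for category, (yes1, yes2, agree) in reported.items():
    cells = cells_from_report(N_LINES, yes1, yes2, agree)
    print(category, cells, f"{cohen_kappa(*cells):.3f}")
```

Under these assumptions the recomputed values round to 0.458, 0.382 and 0.530, which matches the kappa indices reported for the three categories.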

Discussion

In our field, many studies have been performed on ME in chemotherapy, but very few report specific criteria for validating the methodology. Although some authors employ previously validated methodologies,7,8 many others report the absence of methodology validation as an inherent limitation of their study.9,10 Garzás-Martín de Almagro et al.11 stressed that the definition of criteria for data collection and the training of staff involved are key aspects for ensuring the internal validity of their study.

The validation of our data collection sheet has demonstrated moderate interobserver agreement. When analysing the causes of these results, we found that the category that included Pia errors was very broad and encompassed multiple error possibilities. The lack of agreement in the category defined as other errors was also related to this cause, since one OBS classified as other errors what the other OBS classified as Pia.

Various studies have been published on the validation of a methodology in the hospital pharmacy field, but they focused on the coding of pharmaceutical interventions. Font-Noguera et al.12 analysed the MEs detected and obtained complete agreement in the classification, although their results are not directly comparable with ours since they did not focus on antineoplastic medication or use the same ME classification. Clopés Estela et al.13 obtained overall results regarding the impact of pharmaceutical interventions, with kappa values between 0.7 and 0.8 (good agreement) and 95% confidence intervals, but did not include the analysis of MEs in their study. In contrast, at the international level, the results of Cousins et al.14 showed limited values for agreement, with significant differences between the observations carried out by various pharmacists at different time periods. This led them to question the validity of studies of pharmaceutical interventions in which data collection is performed by a single OBS without prior validation of the methodology.

To the best of our knowledge, our study of agreement is the only one of its kind in the chemotherapy field; we have not found similar published studies. We believe that having validated tools is necessary to advance the characterisation of this type of ME.

Although studies of agreement are characterised by simple logistics, simple statistical analysis and broad applicability,5 some authors point out the limitations of the kappa index15,16 and others suggest alternative methods for quantifying agreement in categorical variables.17,18 The limitations of the kappa index5 include, firstly, the fact that the greater the number of categories, the lower the probability of obtaining an exact agreement. Consequently, the kappa index is highly dependent on the number of categories, decreasing as the number of categories increases. To overcome this problem, specific kappa indices were calculated, which analyse agreement separately for each error category. Secondly, the kappa index depends on the prevalence of the categories. Our results show a very low prevalence of error, which meant that the kappa index could only be properly interpreted in one of the 23 categories. For the rest, the low prevalence made the agreement by chance weigh heavily. The sample size needed to overcome the limitation of low prevalence of error would be too large for our healthcare practice. Carrying out this study of agreement on a multicentre basis would allow a larger sample size and, therefore, the possibility of an increase in the prevalence of error and a proper analysis of results. Lastly, the kappa index weights a serious discrepancy and a negligible one equally.
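How heavily chance agreement weighs at low prevalence can be made concrete with the marginal counts implied by Table 2. This is only a sketch: the counts are assumed from the rounded percentages (26.5% and 28.4% of 162 lines for Pia, 1.9% and 1.2% for Pas), chance agreement is computed as in Cohen's kappa, and the function name is hypothetical.

```python
def chance_agreement(yes_obs1: int, yes_obs2: int, n: int) -> float:
    """Agreement expected by chance for one yes/no category (Cohen's P_e)."""
    q1, q2 = yes_obs1 / n, yes_obs2 / n
    return q1 * q2 + (1 - q1) * (1 - q2)


N_LINES = 162
# Assumed error counts per observer, back-calculated from Table 2.
marginals = {"Pia": (43, 46), "Pas": (3, 2)}
for category, (yes1, yes2) in marginals.items():
    pe = chance_agreement(yes1, yes2, N_LINES)
    print(f"{category}: chance agreement {pe:.3f}, room above chance {1 - pe:.3f}")
```

For the rare Pas category, chance alone would already produce about 97% agreement, so the kappa index is determined by only a handful of lines. For Pia, chance accounts for about 60%, which leaves a much wider margin in which real agreement can be measured.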

The aim of a study of agreement is not only to check whether variability exists or not, but also to identify the causes of discrepancies found in order to correct them. Our results showed the need for revising the classification of errors used, which led us to design a new data collection sheet. A new pilot study will be performed with the new classification to determine whether the new definition of categories and training in the use of the classification will improve interobserver agreement. With this study, we hope to convert the new classification into a standardised and reproducible tool for classifying MEs regarding antineoplastic agents in our centre.

Conflicts of interest

The authors declare that they have no conflicts of interest.

We wish to thank the Departamento de Estadística (Department of Statistics) of the Hospital Ramón y Cajal for their help in the design of this study and the analysis of the results.

Please cite this article as: Gramage Caro T, et al. Validación de una clasificación de errores de medicación para su utilización en quimioterapia. Farm Hosp. 2011;35(4):197–203.